7 MINUTE READ

Credit Agreement Analysis with CreditSeer

Enabling 50% faster, safer decisions by designing source-grounded extraction, AI guardrails, and failure-aware components

CONTEXT

CreditSeer is an AI-powered credit analysis tool built to help loan officers at regional banks and credit unions review syndicated loan agreements. It was developed as part of Georgia Tech's Financial Services Innovation Lab, where I led the product from early concept through to a working MVP that was tested with credit analysts across 15+ real credit agreements.

TYPE

FinTech

B2B2C

Responsible AI

ROLE

Product Designer (AI Systems)

DURATION

6 months

TEAM

Product Manager

2 ML Engineers

1 Frontend Developer

PROBLEM

Credit analysts spend 2+ hours on credit agreement review....

Critical details are scattered

Reviewing credit agreements manually is slow because analysts must cross-reference key details scattered across hundreds of pages to understand a single credit facility.

....yet most LLMs aren't trustworthy enough for high-stakes decisions

Hallucinated values create regulatory risk

AI promises to solve the speed issue, but a single extraction error can invalidate a financial recommendation and lead to regulatory failure.

Insight generation without transparency

Most LLMs prioritize "insight generation" (summarizing and interpreting), which lacks the transparency required for regulated finance. What loan officers actually need is defensible, auditable decision support, not an AI that tells them what to think.

SOLUTION

CreditSeer is an AI-assisted system that parses complex credit agreements into a structured, explainable dashboard, enabling faster review without sacrificing trust.

Reorganizing Complex Agreements Around Analyst Workflows

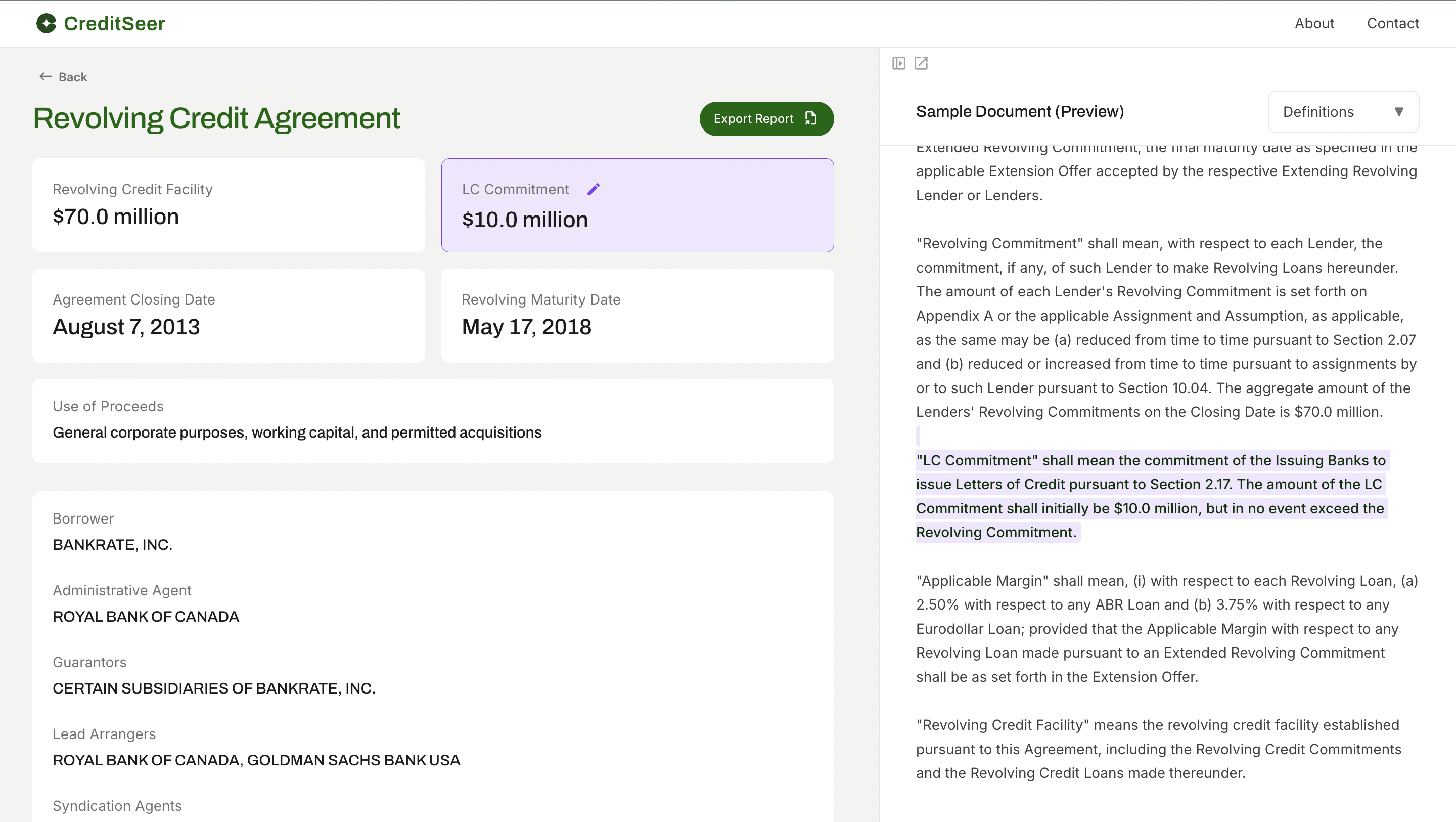

CreditSeer focuses on extracting predefined, high-value financial and legal terms that analysts actually review (e.g., commitments, pricing, covenants, default triggers) and rearranges them into analyst-aligned views.

Why this matters: This reassembly reduces the cognitive load and the time lost to constant cross-referencing.

Workflow Aligned

Faster review

Low cognitive load

Making Every Extracted Value Explainable

Every extracted value is directly linked to its source clause in the agreement, allowing analysts to verify accuracy instantly without manual cross-referencing.

Why this matters: This transforms the tool from a black box into a transparent, auditable decision-making assistant.

Instant verification

Audit-ready

Trust through traceability

Degrading Gracefully When the Model Is Uncertain

The dashboard clearly surfaces ambiguous states and allows analysts to correct values, with each intervention feeding back into pipeline refinement, schema updates, and future annotation strategies.

Why this matters: Surfacing uncertainty builds more trust than false confidence, and each correction creates a feedback loop for model improvement.

Safe failure

Human-in-control

Pipeline Refinement

IMPLEMENTATION HIGHLIGHTS

Leading Cross-Functional Collaboration

What I did: Helped ML engineers define annotation schemas and informed feature decisions through analysis of 15+ agreements, so that model behavior aligned with how analysts actually review and verify terms.

Impact: This prevented the team from wasting time on unreliably extractable fields and helped set realistic expectations with stakeholders about what the MVP could deliver.

Designing an End-to-End Extraction Pipeline

What I did: Architected and implemented the document chunking logic and two-stage extraction pipeline (block discovery + value parsing), working directly in code to test what actually worked vs. what looked good on paper.

Impact: By building the pipeline myself, I could rapidly test extraction patterns, identify failure modes, and iterate on prompt structures within hours instead of waiting days for engineering cycles. It revealed critical insights about context-window, chunk-boundary, and token issues that would have been invisible from mockups alone.
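To make the chunk-boundary issue concrete, here is a minimal sketch of heading-aware chunking. It is an illustration under stated assumptions, not the project's actual chunking logic: the heading pattern (`ARTICLE II`, `Section 2.01`), the `max_tokens` budget, the word-count approximation of tokens, and the name `chunk_by_section` are all hypothetical.

```python
import re

# Assumed heading pattern: lines starting with "ARTICLE II" or "Section 2.01".
HEADING = re.compile(r"^(ARTICLE\s+[IVXLC]+|Section\s+\d+\.\d+)", re.IGNORECASE)


def chunk_by_section(text: str, max_tokens: int = 800) -> list[dict]:
    """Split text at section headings so a definition is not cut in half.

    Tokens are approximated by whitespace-separated words. A section that
    exceeds max_tokens is kept whole and flagged, not split.
    """
    chunks: list[dict] = []
    heading, lines = "PREAMBLE", []

    def flush() -> None:
        body = "\n".join(lines).strip()
        if body:
            n = len(body.split())
            chunks.append({
                "heading": heading,
                "text": body,
                "approx_tokens": n,
                "oversized": n > max_tokens,
            })

    for line in text.splitlines():
        match = HEADING.match(line.strip())
        if match:
            flush()  # close the previous section before starting a new one
            heading, lines = match.group(1), []
        lines.append(line)
    flush()
    return chunks
```

Flagging oversized sections instead of silently splitting them keeps the boundary decision visible, which is the kind of issue that only shows up when running real agreements through the code.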

Building a UI System for Uncertain AI Outputs

What I did: Built a functional React-based MVP using Cursor.ai, Figma, and Vercel rather than creating high-fidelity static mockups. I also created a data-driven design system with components built to handle all AI output states, not just the happy path.

Impact: This approach cut design-to-feedback cycles from weeks to days. Instead of designing for hypothetical "perfect" AI outputs, I could see actual failure modes (blank fields, overlong values, low-confidence extractions) and design appropriate handling patterns immediately.
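The failure modes named above (blank fields, overlong values, low-confidence extractions) can be treated as explicit display states that every component must handle. The sketch below shows that mapping in Python for brevity; the MVP itself was React-based, and the names `DisplayState`, `FieldResult`, `display_state`, and the 40-character threshold are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class DisplayState(Enum):
    EMPTY = "empty"                    # nothing extracted: show a "not found" state
    LOW_CONFIDENCE = "low_confidence"  # model flagged uncertainty: show a review badge
    OVERLONG = "overlong"              # clause-length text: truncate and offer expand
    OK = "ok"                          # happy path


@dataclass
class FieldResult:
    value: Optional[str]
    ambiguous: bool = False  # assumed flag set when the model marks a value as uncertain


def display_state(result: FieldResult, max_chars: int = 40) -> DisplayState:
    """Pick exactly one display state per field, checking failure modes first."""
    if result.value is None or not result.value.strip():
        return DisplayState.EMPTY
    if result.ambiguous:
        return DisplayState.LOW_CONFIDENCE
    if len(result.value) > max_chars:
        return DisplayState.OVERLONG
    return DisplayState.OK
```

Checking the failure states before the happy path means a card can never render an uncertain value as if it were confirmed.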

DEEP DIVE INTO THE EXTRACTION PIPELINE

When early model extraction outputs broke review workflows, I developed a pipeline to understand what GenAI could reliably extract and shape a UX grounded in what analysts could actually trust.

In earlier attempts we extracted structured values directly from large, article-level chunks (often 3,000-5,000 tokens).

The prompt essentially asked: "Extract the base rate, applicable margin, commitment fee, and utilization fee."

Issues:

Some fields were blank

A few returned clause-length text instead of a value

Some values were confidently wrong with no clean trace to source

The dashboard became visually inconsistent and hard to scan

This wasn’t just a model issue — it became a product reliability issue.

• Design impact: cards looked empty or visually messy

• Workflow impact: analysts couldn’t quickly verify or compare

• System impact: errors were hard to debug (chunk? block? field?)

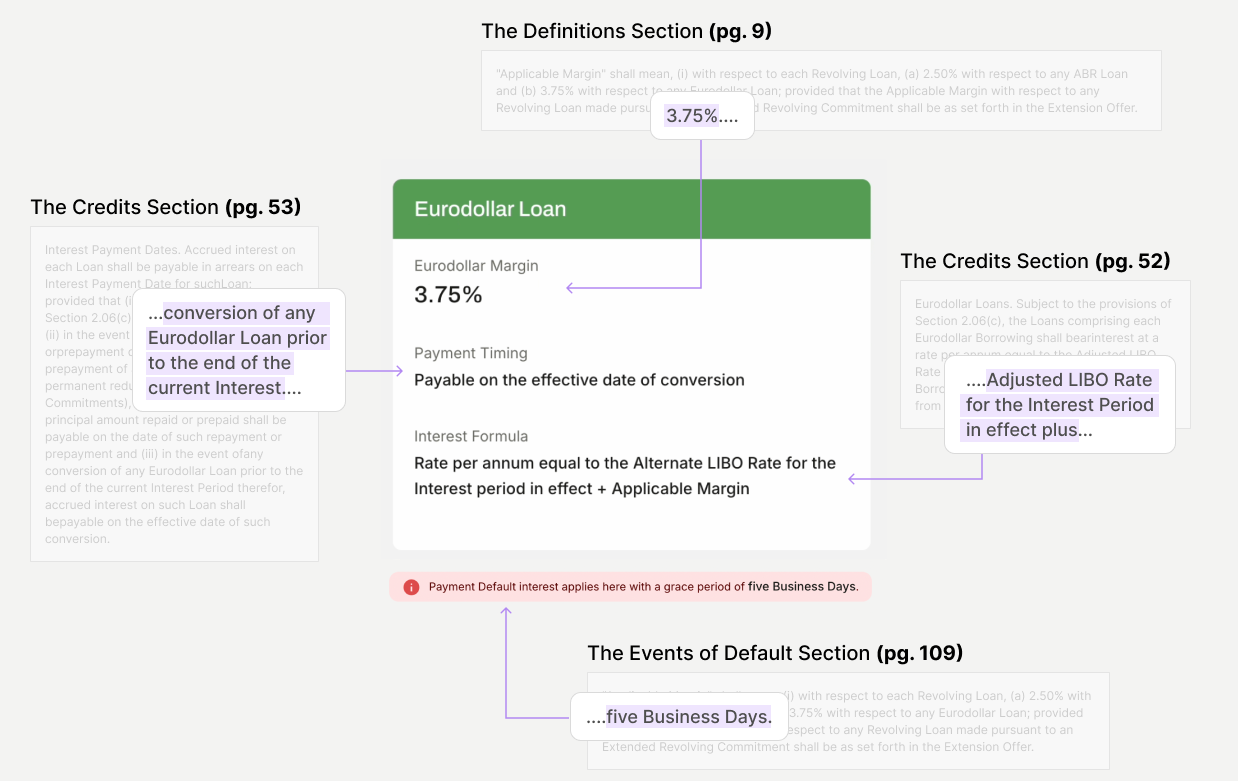

I split extraction into two sequential, focused steps, block discovery and value parsing, each with its own objective and constraints. Splitting the work this way constrains context, reduces ambiguity, and produces schema-aligned outputs.

Stage 1 - Block Discovery

The prompt asks: "Find the exact block that defines the 'Applicable Margin' and return it word-for-word"

Key design decisions:

Used defined patterns for each field to guide the model

Required verbatim extraction, no interpretation

Mandated location metadata (page, section, line numbers)

Why this works:

The model has far less room to hallucinate when it must quote directly, and any quote can be checked against the source text

Every block has a documented source location

If extraction fails, we can examine the retrieved block to see if it's even the right content
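One way to enforce the verbatim requirement from Stage 1 is to check each returned block against the page it cites. The sketch below is an assumption about how such a check could look, not the project's code: the `Block` fields, the `pages` mapping of page number to page text, and the whitespace normalization are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Block:
    field: str       # e.g. "applicable_margin"
    text: str        # expected to be a word-for-word quote from the agreement
    page: int        # location metadata returned alongside the quote
    section: str
    line_start: int
    line_end: int


def normalize(s: str) -> str:
    """Collapse whitespace so line wrapping in the source does not break matching."""
    return " ".join(s.split())


def verify_block(block: Block, pages: dict[int, str]) -> bool:
    """Return True only if the quoted text really appears on the cited page."""
    page_text = pages.get(block.page)
    if page_text is None:
        return False
    return normalize(block.text) in normalize(page_text)
```

A block that fails this check is either paraphrased or cited to the wrong location, so it can be rejected before Stage 2 ever sees it.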

Stage 2 - Value Parsing

The prompt asks: "From this block about 'Applicable Margin', extract: (1) base value, (2) adjustment trigger"

Key design decisions:

Extremely constrained context: only the relevant block is passed in

Strict schema with expected formats, units, and field types

Required "ambiguous" markers when uncertain

Why this works:

Smaller context + strict schema = consistent output

Known output structure means components can be designed with confidence

Values are formatted for UI display, not as paragraphs
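As an illustration of the strict-schema idea in Stage 2, the sketch below validates a model response for the 'Applicable Margin' block and downgrades anything off-schema to "ambiguous". The JSON keys `base_value` and `adjustment_trigger`, the `MarginValue` type, and the choice of a numeric percent for the base value are assumptions for the example, not the project's actual schema.

```python
import json
from dataclasses import dataclass
from typing import Optional

AMBIGUOUS = "ambiguous"


@dataclass
class MarginValue:
    base_value_pct: Optional[float]    # e.g. 2.5 for "2.50%"
    adjustment_trigger: Optional[str]  # short phrase, not a paragraph
    status: str                        # "ok" or "ambiguous"


def parse_margin(raw_json: str) -> MarginValue:
    """Validate Stage 2 output; missing, malformed, or flagged fields become ambiguous."""
    try:
        data = json.loads(raw_json)
        base = data["base_value"]
        trigger = data["adjustment_trigger"]
    except (json.JSONDecodeError, KeyError, TypeError):
        # Not JSON, not an object, or a required key is missing.
        return MarginValue(None, None, AMBIGUOUS)

    # bool is a subclass of int in Python, so exclude it explicitly.
    base_ok = isinstance(base, (int, float)) and not isinstance(base, bool)
    trigger_ok = isinstance(trigger, str) and trigger != AMBIGUOUS
    return MarginValue(
        base_value_pct=float(base) if base_ok else None,
        adjustment_trigger=trigger if trigger_ok else None,
        status="ok" if base_ok and trigger_ok else AMBIGUOUS,
    )
```

Because every path returns the same `MarginValue` shape, a UI component can rely on the structure even when the extraction itself is uncertain.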

This architectural change fundamentally altered what the product could do:

For the design:

UI components could now be designed with predictable data shapes, eliminating the "messy card" problem and enabling the consistent, scannable dashboard analysts needed.

For trust:

The two-stage approach made the model's reasoning visible: analysts could see both the extracted value and the source block it came from, which built confidence through transparency.

For debugging:

When something went wrong, the team could now isolate whether the issue was in block discovery (Stage 1) or value parsing (Stage 2), dramatically speeding up iteration cycles.
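The stage-isolation idea can be sketched as a simple heuristic: if the analyst's corrected value appears in the retrieved block, the block was right and parsing failed; otherwise the wrong block was retrieved. The function name `diagnose`, its return labels, and the plain substring match are assumptions for illustration; a real check would need to tolerate formatting differences such as "2.5%" versus "2.50%".

```python
from typing import Optional


def diagnose(block_text: Optional[str], extracted: Optional[str], corrected: str) -> str:
    """Heuristically attribute an analyst correction to a pipeline stage."""
    target = corrected.strip()
    if extracted is not None and extracted.strip() == target:
        return "no_error"
    if not block_text or target not in block_text:
        # The correct value is nowhere in the retrieved block.
        return "stage_1_block_discovery"
    # The right block was found, but the value was parsed incorrectly.
    return "stage_2_value_parsing"


print(diagnose("Applicable Margin means 2.50% per annum", "2.05%", "2.50%"))
```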

For ML improvement:

The intermediate block artifacts created a natural annotation dataset. When analysts corrected values, we could trace back to see if the wrong block was retrieved or if parsing was the issue, directly informing model retraining priorities.
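A minimal sketch of how analyst corrections could be stored as annotation records and summarized into retraining priorities follows. The `Correction` fields, the `block_was_correct` flag, and the JSON Lines export are hypothetical choices for the example; the source does not describe the actual storage format.

```python
import json
from collections import Counter
from dataclasses import asdict, dataclass


@dataclass
class Correction:
    agreement_id: str
    field: str               # e.g. "applicable_margin"
    block_text: str          # Stage 1 artifact kept alongside the correction
    extracted: str           # what Stage 2 produced
    corrected: str           # what the analyst entered
    block_was_correct: bool  # assumed flag: was the right block retrieved?


def to_jsonl(corrections: list[Correction]) -> str:
    """Serialize corrections as one JSON object per line for annotation tooling."""
    return "\n".join(json.dumps(asdict(c), sort_keys=True) for c in corrections)


def retraining_priorities(corrections: list[Correction]) -> list[tuple[tuple[str, str], int]]:
    """Count corrections per (field, failing stage), most frequent first."""
    counts: Counter = Counter()
    for c in corrections:
        stage = "value_parsing" if c.block_was_correct else "block_discovery"
        counts[(c.field, stage)] += 1
    return counts.most_common()
```

Grouping by field and stage turns individual corrections into a ranked list of where the pipeline fails most often.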

IMPACT

~50% reduction in review time

Reduced end-to-end agreement review from ~2 hours to ~1 hour by reorganizing fragmented terms into workflow-aligned views (e.g., pricing, covenants, triggers).

Helped boost model accuracy

Achieved through product-led iteration: redesigning extraction logic, tightening schemas, and identifying edge cases across 15+ real credit agreements.

Cut design–engineering–feedback time from weeks to days

Built and iterated on a functional React-based MVP using Cursor.ai and rapid prototyping tools, allowing real model outputs to directly inform design decisions.

Built Shared Design Infrastructure for the Lab

Created a scalable, data-driven design system and supporting documentation (including financial concepts) that serve as a reference for future projects and improve onboarding across all teams.

REFLECTION

AI Tools Pushed Me Beyond UX Into System Design

Using AI tools like Cursor helped me move beyond just designing screens. I ended up building parts of the extraction logic as well, which changed how I think about the role of a product designer—as someone who shapes system behavior, not just interfaces.

Understanding the Domain Was Necessary to Simplify It

To design for credit analysts, I had to deeply understand how syndicated credit agreements work. Learning the domain was key to turning dense, fragmented information into something usable and coherent.

Early Access to Users and Data Matters More Than Process

Working in fintech made it clear that limited access to real users and agreements can slow down the right decisions. In trust-critical domains, getting early exposure to real data and analyst workflows is essential to designing something reliable.

Designing for Uncertainty Is Part of Designing for AI

This project reinforced that AI won’t always be right, especially in high-stakes workflows. Making uncertainty visible and designing clear fallback paths helped keep the product usable and trustworthy.